Microsoft & OpenAI: $2 Million To Fight Deepfakes

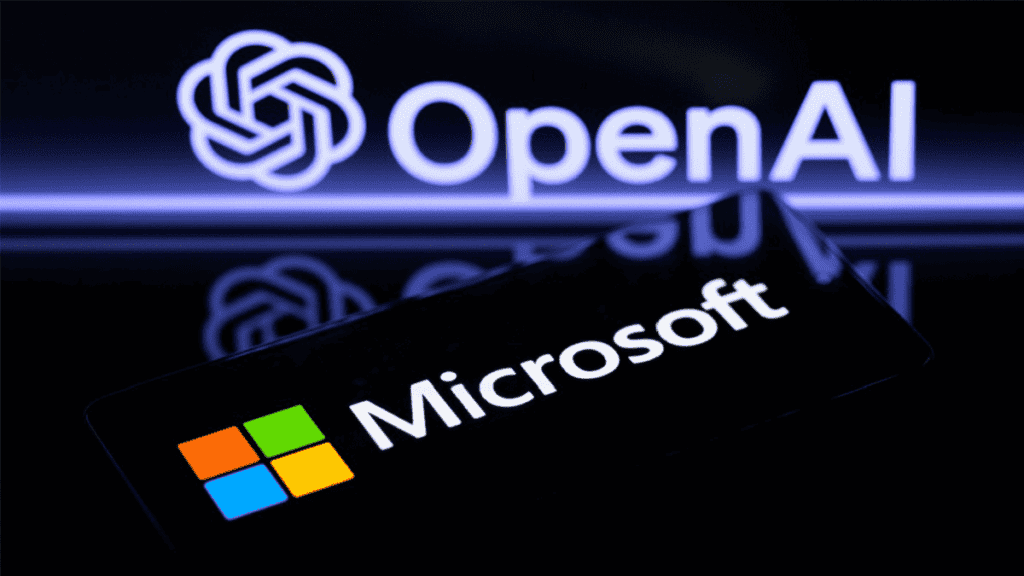

Microsoft and OpenAI have announced a $2 million “societal resilience fund” to address the growing challenges that AI and deepfakes pose to elections around the world. The rise of generative AI, and its potential misuse to create convincing but fake digital material, has prompted major technology companies to take steps to defend electoral integrity.

These companies, including Microsoft and OpenAI, collaborated to develop a common framework for countering deepfakes that could mislead voters. Separately, OpenAI recently released a deepfake detector to identify fake content, expanding the tools available to combat disinformation.

According to Microsoft, the fund’s grants are meant to promote a greater understanding of AI and its capabilities throughout society. For example, Older Adults Technology Services (OATS) will use its grant to fund training programs for people over 50 in the United States, focusing on “foundational aspects of AI.”

According to TechCrunch, “The launch of the Societal Resilience Fund is just one step that represents Microsoft and OpenAI’s commitment to address the challenges and needs in the AI literacy and education space,” said Teresa Hutson, Microsoft’s corporate VP for technology and corporate responsibility. “Microsoft and OpenAI will remain dedicated to this work, and we will continue to collaborate with organizations and initiatives that share our goals and values.” (TechCrunch, n.d.)

AI vs. AI: Fighting Deepfakes

The growing threat of deepfakes demands a new approach. Microsoft and OpenAI, two of the leading tech companies, established the Societal Resilience Fund to give citizens the tools to fight deepfakes.

The table below details the key roles and the contributions of organizations and companies in the fight against deepfakes:

| Organization | Role in Societal Resilience Fund | Expertise |
| --- | --- | --- |
| Microsoft & OpenAI | Leadership & Funding | Artificial Intelligence |
| Older Adults Technology Services (OATS) | Educate seniors on identifying deepfakes | AI Literacy & Senior Education |
| Coalition for Content Provenance and Authenticity (C2PA) | Establish standards for verifying online content authenticity | Content Authentication & Standards Development |
| International Institute for Democracy and Electoral Assistance (International IDEA) | Safeguard elections from manipulation | Electoral Security & Democratic Practices |
| Partnership on AI (PAI) | Develop best practices for responsible AI development | Ethical AI Development & Industry Collaboration |

This collaboration draws on the distinct strengths of each organization to work toward a more informed and secure digital future. The Societal Resilience Fund offers a promising approach in the ongoing battle against deepfakes. Its success depends not only on the efforts of these organizations but also on individuals acting as responsible online citizens committed to digital literacy.

Microsoft & OpenAI: $2M to Stop Deepfakes

With education and user empowerment at its core, the fund takes a distinctive approach to the challenge of deepfakes. However, the success of such initiatives depends on a multifaceted effort. The table below shows the strengths and weaknesses of the project, offering insight into both the potential of the Societal Resilience Fund and the challenges it faces.

| Strengths | Weaknesses |
| --- | --- |
| Comprehensive: Focuses on education and literacy, empowering individuals to identify deepfakes. | Scalability and sustainability: $2 million may not be enough to sustain long-term educational programs worldwide, particularly in remote areas with limited access to educational resources. |
| Global Impact: Targets voters and vulnerable communities worldwide, preparing them for misinformation campaigns, especially during elections. | Measuring Effectiveness: The impact of education on the fight against deepfakes is hard to determine. Evaluating the fund’s overall success and its influence on mitigating AI-generated misinformation may require innovative methods. |
| Expert Collaboration: Supports established organizations such as OATS, C2PA, International IDEA, and PAI. These organizations help vulnerable communities learn about content authentication and responsible AI development. | |
| Proactive Strategy: Provides citizens with knowledge before they encounter deceitful content, fostering a more informed and resilient society. | |

Truth Tech: Fighting Deepfakes

With an initial investment of $2 million, the fund supports organizations that deliver AI education and improve public understanding of AI capabilities. The investment matters because these organizations are central to achieving the fund’s goals:

Empowering Communities: The fund prioritizes vulnerable populations such as senior citizens by providing grants to organizations like OATS, equipping these communities with the tools to identify and access credible resources.

Enhancing AI Literacy: By promoting AI education, the fund aims to increase AI literacy among the general public. This helps vulnerable citizens and voters identify and counter deceitful AI content, fostering a more informed and discerning society.

Promoting Transparency: The fund supports projects like the Partnership on AI’s Synthetic Media Framework. This framework fosters transparency in AI development and promotes best practices for responsible AI use, mitigating the risks associated with AI-generated misinformation.

Global Awareness: International IDEA’s involvement significantly expands the project’s reach. Through global training programs, the initiative helps raise public awareness about deepfakes. These programs also empower electoral management bodies, civil society, and media actors, fostering a more informed electorate and strengthening democratic processes.

Beyond Deepfakes: The Future of Truth

Deepfakes, or hyper-realistic AI-generated videos, blur the line between fact and fantasy. While they can serve entertainment, artistic, and educational purposes, deepfakes can also cause significant harm, damaging reputations and even influencing elections.

Microsoft and OpenAI’s Societal Resilience Fund fights back through education rather than technology. By educating people about AI and deepfakes, it aims to build a society capable of critically evaluating online content.

This is about more than simply preventing deepfakes. It is about creating a future in which everyone can understand the complexity of AI while holding those who abuse it accountable.

The fight for truth in the digital age requires continuous work. Researching educational approaches and collaborating with technologists, educators, and policymakers is critical. By empowering citizens and encouraging ethical AI development, we can ensure that truth stays at the heart of a thriving digital society.

Ethan Park holds a PhD in Artificial Intelligence, having conducted research in machine learning algorithms and natural language processing. With a strong foundation in mathematics and programming, Ethan is passionate about exploring the ethical implications and applications of AI.