Edge Computing is changing how AI technology operates across industries. It brings data processing closer to the source, which reduces delays and improves efficiency. In today’s interconnected world, where intelligence and instant decision-making matter, Edge Computing stands as a vital innovation. It bridges the gap between cloud systems and real-time data processing, making computer systems faster and more responsive. This article covers its background, types, mechanisms, and applications, and explains how it supports emerging fields like Gen AI, automation, and robots. By the end, you’ll see how it reshapes industries with smarter, faster, and more secure solutions designed for the future.

What is Edge Computing?

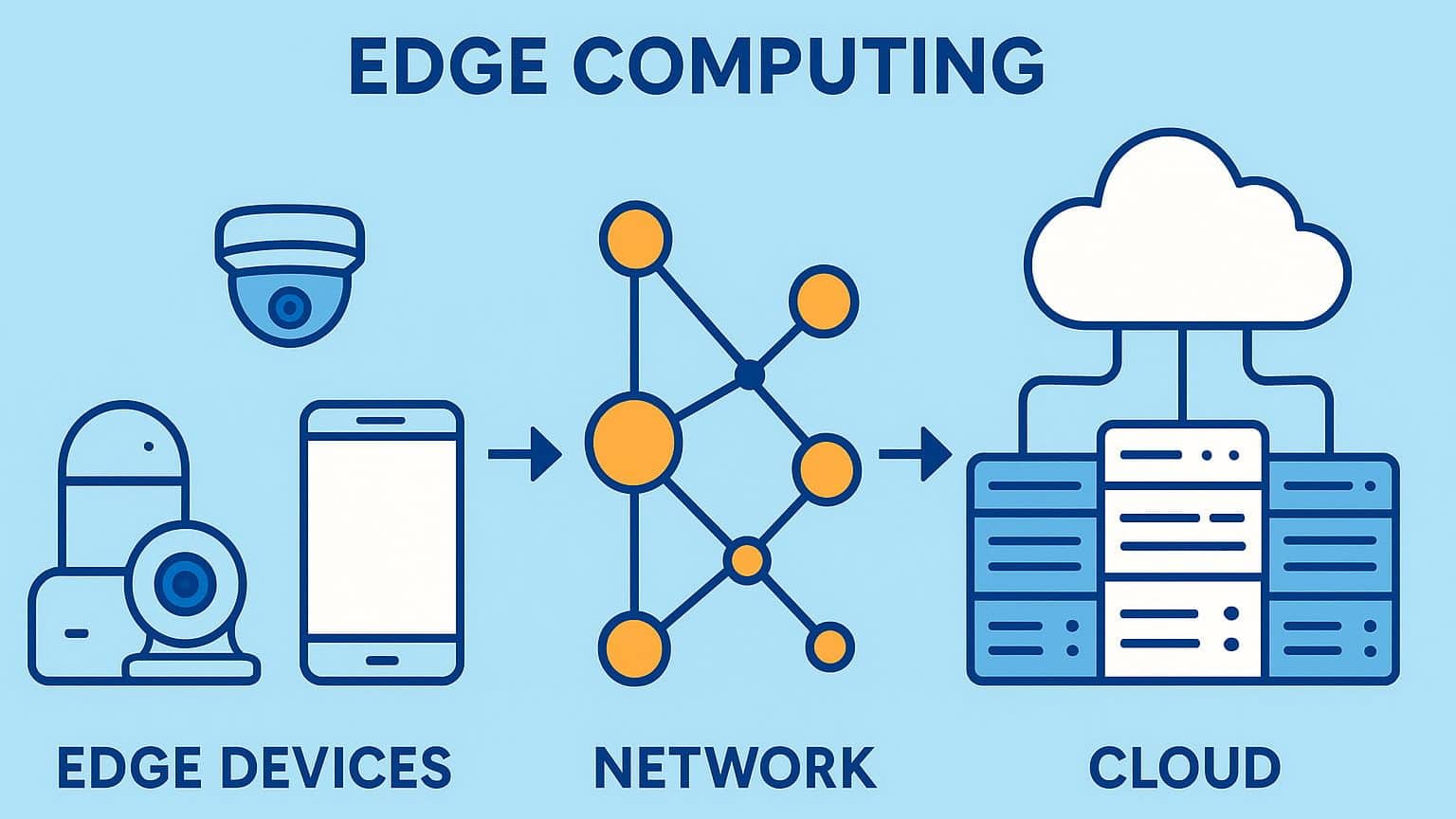

Edge Computing refers to the practice of processing data near its source rather than relying solely on centralized cloud servers. Instead of sending all information to distant data centers, devices at the network’s “edge” handle tasks locally. This cuts latency, because data no longer makes a round trip to a remote server, and it enables real-time decision-making. Its core purpose is to improve performance, security, and reliability while minimizing bandwidth usage.

It fits perfectly into today’s fast-paced digital environment, where countless connected devices—such as sensors, mobile phones, and smart machines—generate continuous data streams. By analyzing information closer to where it’s created, Edge Computing empowers industries to act instantly. It complements cloud computing by handling immediate tasks locally while reserving complex operations for the cloud. As technology evolves, this approach supports innovations in AI, automation, and the Internet of Things (IoT), making modern infrastructure more adaptive and intelligent.

Background of Edge Computing

Edge Computing consists of several essential components that make it efficient and scalable. Each part contributes to faster data delivery and improved system reliability.

Key Components:

Edge Devices

To begin with, edge devices serve as the foundation of edge computing systems. These include smart sensors, IoT machines, cameras, and mobile gadgets that both collect and process data locally. By handling computations at or near the source, rather than sending everything to distant servers, edge devices significantly reduce latency and network congestion. Consequently, this local processing enables faster decision-making, especially in real-time applications such as autonomous vehicles, industrial automation, and healthcare monitoring. In essence, edge devices make computing more responsive and efficient right where data is generated.
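To make the idea of on-device processing concrete, here is a minimal Python sketch of a deadband filter, one common way a sensor can decide locally which readings are worth sending upstream. The class name `DeadbandFilter`, the helper `filter_readings`, and the threshold value are illustrative assumptions, not part of any specific edge platform.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class DeadbandFilter:
    """Hypothetical on-device filter: forward a reading only when it
    differs from the last forwarded reading by at least `threshold`."""
    threshold: float
    last_sent: Optional[float] = None

    def accept(self, value: float) -> bool:
        # The first reading is always forwarded; later ones only on a big enough change.
        if self.last_sent is None or abs(value - self.last_sent) >= self.threshold:
            self.last_sent = value
            return True
        return False


def filter_readings(readings: List[float], threshold: float) -> List[float]:
    """Return the subset of readings the device would actually transmit."""
    deadband = DeadbandFilter(threshold)
    return [r for r in readings if deadband.accept(r)]


if __name__ == "__main__":
    temperatures = [20.0, 20.1, 20.2, 21.5, 21.6, 19.0]
    print(filter_readings(temperatures, threshold=1.0))
```

With a threshold of 1.0, only three of the six sample readings would leave the device, which is the kind of traffic reduction the paragraph above describes.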

Edge Nodes

Furthermore, edge nodes play a crucial role in enhancing processing efficiency. Acting as mini data centers or localized servers, they are strategically positioned close to end users to handle immediate computing tasks. These nodes bridge the gap between individual edge devices and centralized cloud systems, performing complex operations such as data filtering, caching, and temporary storage. As a result, they decrease bandwidth usage and ensure quicker responses to user demands. In industries where real-time performance is vital—like logistics or remote monitoring—edge nodes provide the speed and reliability that centralized systems alone cannot offer.
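Caching on an edge node can be sketched in a few lines of Python. The example below is a simplified time-to-live cache, assuming a hypothetical `fetch` callback that stands in for a slow request to the cloud; real edge nodes typically add eviction limits and invalidation, which are left out here.

```python
import time
from typing import Any, Callable, Dict, Tuple


class TTLCache:
    """Hypothetical edge-node cache: serve a stored value while it is fresh,
    otherwise call `fetch` (standing in for a round trip to the cloud)."""

    def __init__(self, ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._ttl = ttl_seconds
        self._clock = clock  # injectable so the cache can be tested without sleeping
        self._store: Dict[str, Tuple[float, Any]] = {}

    def get(self, key: str, fetch: Callable[[str], Any]) -> Any:
        now = self._clock()
        entry = self._store.get(key)
        if entry is not None and now - entry[0] < self._ttl:
            return entry[1]  # cache hit: no upstream request needed
        value = fetch(key)   # cache miss or stale entry: go upstream
        self._store[key] = (now, value)
        return value


if __name__ == "__main__":
    cache = TTLCache(ttl_seconds=30.0)
    print(cache.get("config", lambda k: f"value-for-{k}"))  # fetched upstream
    print(cache.get("config", lambda k: "not called"))      # served locally
```

Repeated requests for the same key within the time-to-live never leave the node, which is how caching lowers bandwidth use and response time.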

Cloud Integration

Cloud integration ensures that edge computing systems maintain connectivity with centralized cloud infrastructures. While edge systems focus on rapid, local processing, the cloud complements this by managing large-scale analytics, data storage, and machine learning model updates. This combination forms a hybrid architecture where both systems work hand in hand. On one hand, edge computing boosts speed and autonomy; on the other, the cloud ensures scalability and long-term data management. Therefore, cloud integration allows organizations to balance agility with analytical depth.

Network Infrastructure

Equally important, the network infrastructure acts as the communication backbone linking edge devices, nodes, and cloud environments. It relies on technologies such as 5G, fiber optics, and advanced communication protocols to deliver fast and reliable connections. Moreover, a well-designed network ensures low latency, high throughput, and minimal data loss during transmission. This infrastructure not only keeps data synchronized across multiple systems but also supports continuous operation in dynamic environments. Consequently, it is indispensable for applications that demand real-time coordination, such as smart cities and industrial IoT ecosystems.
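A quick back-of-the-envelope calculation shows why physical distance matters for latency. The sketch below assumes signals travel through optical fiber at roughly 2 × 10^8 meters per second (about two thirds of the speed of light in a vacuum) and counts propagation delay only; routing, queuing, and processing time would add to these figures. The function name and the sample distances are illustrative.

```python
# Approximate signal speed in optical fiber, about two thirds of c (an assumption
# good enough for rough estimates).
SPEED_IN_FIBER_M_PER_S = 2.0e8


def round_trip_propagation_ms(distance_km: float) -> float:
    """Round-trip propagation delay in milliseconds over a fiber path.

    Covers propagation only; switching, queuing, and server time are excluded.
    """
    one_way_seconds = (distance_km * 1000.0) / SPEED_IN_FIBER_M_PER_S
    return 2.0 * one_way_seconds * 1000.0


if __name__ == "__main__":
    for label, km in [("nearby edge node", 10.0), ("distant data center", 1500.0)]:
        print(f"{label}: {round_trip_propagation_ms(km):.2f} ms round trip")
```

Under these assumptions, a server 1,500 km away costs about 15 ms per round trip before any work is done, while a node 10 km away costs about 0.1 ms.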

Security Layers

Finally, security layers provide the protective shield that keeps edge computing systems safe from cyber threats. Since data is processed and transmitted across multiple decentralized points, strong encryption, authentication, and access control measures are essential. Additionally, localized processing helps reduce the risks associated with large-scale data breaches, though it introduces new challenges at numerous endpoints. For this reason, multi-layered security protocols—including edge firewalls, anomaly detection, and secure data channels—are implemented to maintain confidentiality and integrity. Ultimately, effective security ensures user trust and the long-term sustainability of edge computing technologies.
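As one small illustration of authentication between edge devices and nodes, the Python sketch below signs each message with an HMAC so the receiver can detect tampering. It uses only the standard library; the shared key and message layout are assumptions made for the example, and key distribution and encryption of the payload are out of scope here.

```python
import hashlib
import hmac
import json


def sign_message(payload: dict, key: bytes) -> dict:
    """Serialize the payload deterministically and attach an HMAC-SHA256 tag."""
    body = json.dumps(payload, sort_keys=True, separators=(",", ":"))
    tag = hmac.new(key, body.encode("utf-8"), hashlib.sha256).hexdigest()
    return {"body": body, "tag": tag}


def verify_message(message: dict, key: bytes) -> bool:
    """Recompute the tag and compare in constant time."""
    expected = hmac.new(key, message["body"].encode("utf-8"),
                        hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, message["tag"])


if __name__ == "__main__":
    shared_key = b"example-key-for-demo-only"  # hypothetical; never hard-code real keys
    msg = sign_message({"sensor": "pump-7", "pressure": 4.2}, shared_key)
    print(verify_message(msg, shared_key))  # genuine message

    msg["body"] = msg["body"].replace("4.2", "9.9")
    print(verify_message(msg, shared_key))  # tampered message
```

This covers integrity and authenticity only. Confidentiality would still need an encrypted channel such as TLS on top.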

These components work together to enhance user experience while maintaining security and speed. By distributing computing tasks strategically, Edge Computing helps organizations build robust, intelligent networks capable of handling high-demand workloads efficiently.

History of Edge Computing

Edge Computing originated from the need to improve the performance of distributed networks in the early 2000s. As the number of connected devices grew, traditional cloud computing faced challenges with latency and bandwidth. To address this, companies began exploring localized data processing methods.

| Year | Milestone | Description |
|---|---|---|
| 2000 | Early CDN Development | Introduced content delivery networks that processed data closer to users. |
| 2010 | IoT Expansion | Massive growth in smart devices highlighted the need for faster data handling. |
| 2015 | Edge Term Coined | Tech leaders formalized the concept of processing data at the network’s edge. |
| 2020 | 5G Integration | High-speed networks accelerated Edge Computing adoption globally. |
| 2023 | AI and Automation Growth | Integration with Gen AI and robots expanded industrial and consumer uses. |

This evolution continues as industries adapt to real-time demands in automation, AI, and connected ecosystems.

Types of Edge Computing

Edge Computing can be categorized based on how and where it processes data. The main types include:

- Device Edge: Data is processed directly on local devices like smartphones or sensors.

- Network Edge: Tasks are handled at telecom towers or routers to reduce transmission delays.

- Cloud Edge: Combines cloud infrastructure with nearby edge servers for optimal balance.

- Industrial Edge: Used in manufacturing and automation for instant machine feedback.

- Mobile Edge: Common in 5G networks, providing ultra-low latency for smart applications.

Each type offers unique benefits depending on the environment. Together, they create a flexible computing ecosystem capable of adapting to the demands of modern digital infrastructures.

How Does Edge Computing Work?

Edge Computing operates by processing data locally before sending it to centralized cloud servers. When a device generates data, nearby edge nodes analyze it and filter out the relevant information right away. Only essential data is transmitted to the cloud for long-term storage or complex processing.

This system reduces bandwidth use and speeds up response times. For example, in autonomous robots or smart cities, Edge Computing ensures immediate action without waiting for remote servers. Through this decentralized approach, it delivers reliable, real-time solutions that support the ever-growing demand for connected and intelligent technology.
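The filter-then-forward flow described in this section can be sketched as follows. In this hypothetical Python example, an edge node reduces a window of raw readings to a short summary plus any values that cross an alert threshold, and only that summary would be uploaded. The function name, field names, and threshold are assumptions for illustration.

```python
from statistics import mean
from typing import Dict, List


def summarize_window(readings: List[float], alert_above: float) -> Dict[str, object]:
    """Reduce a window of raw readings to the small payload sent to the cloud.

    Raw values stay on the edge node; only aggregates and out-of-range
    readings are forwarded.
    """
    if not readings:
        raise ValueError("readings must not be empty")
    return {
        "count": len(readings),
        "mean": mean(readings),
        "max": max(readings),
        "alerts": [r for r in readings if r > alert_above],
    }


if __name__ == "__main__":
    window = [70.0, 71.0, 69.0, 95.0, 70.0]  # e.g. one minute of sensor samples
    print(summarize_window(window, alert_above=90.0))
```

A local controller could act on the alert immediately, while the cloud receives just four fields instead of every raw sample.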

Pros and Cons of Edge Computing

| Pros | Cons |
|---|---|
| Reduces latency and enhances real-time processing. | Requires higher upfront infrastructure costs. |
| Strengthens data security through localized control. | Complex management across multiple nodes. |
| Improves bandwidth efficiency. | Limited storage and computing capacity on local devices. |
| Enables offline operations when cloud access fails. | Security maintenance can be challenging in remote locations. |

Despite challenges, the advantages of Edge Computing make it an essential technology for modern businesses aiming for speed, safety, and scalability.
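The offline-operation benefit and the limited-storage drawback listed above both show up in a store-and-forward buffer. The Python sketch below is a minimal version, assuming a hypothetical `send` callback that returns `True` when an upload succeeds; it is not tied to any particular cloud SDK.

```python
from collections import deque
from typing import Callable, Deque


class StoreAndForward:
    """Hypothetical edge-side buffer: keep records while the cloud is
    unreachable and upload them in order once the link returns."""

    def __init__(self, send: Callable[[dict], bool], max_buffered: int = 1000) -> None:
        self._send = send
        # Bounded on purpose: edge storage is limited, so when the buffer is
        # full the oldest record is dropped to make room for the newest.
        self._buffer: Deque[dict] = deque(maxlen=max_buffered)

    def submit(self, record: dict) -> None:
        self._buffer.append(record)
        self.flush()

    def flush(self) -> int:
        """Try to upload buffered records oldest first; stop at the first failure."""
        sent = 0
        while self._buffer:
            if not self._send(self._buffer[0]):
                break
            self._buffer.popleft()
            sent += 1
        return sent

    @property
    def pending(self) -> int:
        return len(self._buffer)


if __name__ == "__main__":
    online = False
    uploaded = []

    def send(record: dict) -> bool:
        if online:
            uploaded.append(record)
        return online

    queue = StoreAndForward(send)
    queue.submit({"id": 1})
    queue.submit({"id": 2})
    print(queue.pending)   # records are held locally while offline
    online = True
    queue.submit({"id": 3})
    print(uploaded)        # backlog is delivered in order after reconnecting
```

The bounded buffer is a deliberate trade-off: the device keeps working during an outage, but a long enough outage will cost the oldest data.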

Uses of Edge Computing

Edge Computing plays a crucial role in transforming industries worldwide.

- Healthcare: Real-time monitoring devices process patient data instantly for faster care.

- Manufacturing: Smart factories use edge systems to detect and fix issues automatically.

- Transportation: Self-driving cars rely on local data analysis to make instant decisions.

- Retail: Stores use edge devices to track inventory and enhance customer experience.

- AI and Robots: Autonomous systems leverage Edge Computing to operate with precision and minimal delay.

These diverse applications show how Edge Computing integrates seamlessly with today’s intelligent and connected world.
